Before discussing concrete risk scenarios, we need a framework for evaluating where along the risk spectrum they lie. Risk classification is inherently multi-dimensional; there is no single "best" categorization. We have chosen to break risks down into two factors: "why risks occur" (cause) and "how bad can the risks get" (severity). Other complementary frameworks, such as MIT's risk taxonomy, classify risks along different dimensions, like "who causes them" (humans vs. AI systems), "when they emerge" (development vs. deployment), or "whether outcomes are intended" (Slattery et al., 2024). Our decomposition is just one of many possible outlooks, but the risks we discuss tend to recur across these frameworks.

Causes of Risk

We categorize AI risks by causal responsibility to understand intervention points. We divide risks based on who or what bears primary responsibility: humans using AI as a tool (misuse), AI systems themselves behaving unexpectedly (misalignment), or emergent effects from complex system interactions (systemic). This causal outlook helps identify where interventions might be most effective.

- Misuse risks occur when humans intentionally deploy AI systems to cause harm. Those responsible can include malicious actors, nation states, corporations, or individuals who leverage AI capabilities to accelerate existing threats or create new ones. The AI system may function exactly as designed, but human intent creates the risk. Examples range from using AI to generate malware or bioweapons to deploying autonomous weapons or conducting large-scale disinformation campaigns.

- Misalignment risks emerge when AI systems pursue goals different from human intentions. These risks stem from technical challenges in specifying objectives, training processes that create unexpected behaviors, or AI systems learning goals that conflict with human values. Unlike misuse, these risks occur despite good human intentions: the AI system itself generates the harmful behavior through specification gaming, goal misgeneralization, or other alignment failures.

- Systemic risks arise from AI integration with complex global systems, creating emergent threats that no single actor intended. These include power concentration as AI capabilities become monopolized, mass unemployment from automation, epistemic erosion as AI-generated content floods information systems, and cascading failures across interconnected infrastructure. Responsibility becomes diffuse across many actors and systems, making traditional accountability frameworks inadequate.

Many real-world AI risks combine multiple causal pathways or resist clear categorization entirely. Analysis of over 1,600 documented AI risks reveals that many don't fit cleanly into any single category (Slattery et al., 2024). Risks involving human-AI interaction blend individual misalignment with systemic risks. Multi-agent risks emerge from AI systems interacting in unexpected ways. Some scenarios involve cascading effects where misuse enables misalignment, or where systemic pressures amplify individual failures. We have chosen the causal decomposition for explanatory purposes, but it is worth keeping in mind that there will be overlap, and the future will likely contain a mix of risks from various causes.
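
One way to make the overlap concrete is to treat the three causes as combinable tags rather than mutually exclusive bins. The snippet below is a minimal illustrative sketch, not part of any cited taxonomy: the names `Cause`, `RiskScenario`, and `is_mixed` are hypothetical, and the example tags simply mirror the examples given in the text above.

```python
from dataclasses import dataclass
from enum import Flag, auto


class Cause(Flag):
    """Causal categories from the text; a Flag so that causes can be combined."""
    MISUSE = auto()        # humans intentionally deploy AI to cause harm
    MISALIGNMENT = auto()  # the AI system pursues goals humans did not intend
    SYSTEMIC = auto()      # emergent effects that no single actor intended


@dataclass(frozen=True)
class RiskScenario:
    """Hypothetical record pairing a scenario name with its causal tags."""
    name: str
    causes: Cause

    def is_mixed(self) -> bool:
        """Return True when more than one causal category applies."""
        return bin(self.causes.value).count("1") > 1


# Example tags follow the text; they are illustrative, not a dataset.
scenarios = [
    RiskScenario("AI-generated malware", Cause.MISUSE),
    RiskScenario("Specification gaming", Cause.MISALIGNMENT),
    RiskScenario("Human-AI interaction harms", Cause.MISALIGNMENT | Cause.SYSTEMIC),
]

mixed = [s.name for s in scenarios if s.is_mixed()]
```

Using a flag type instead of a single-valued label keeps the three-way decomposition while letting one scenario carry several causes at once, which matches the point that real risks often resist clean categorization.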

Severity of Risk

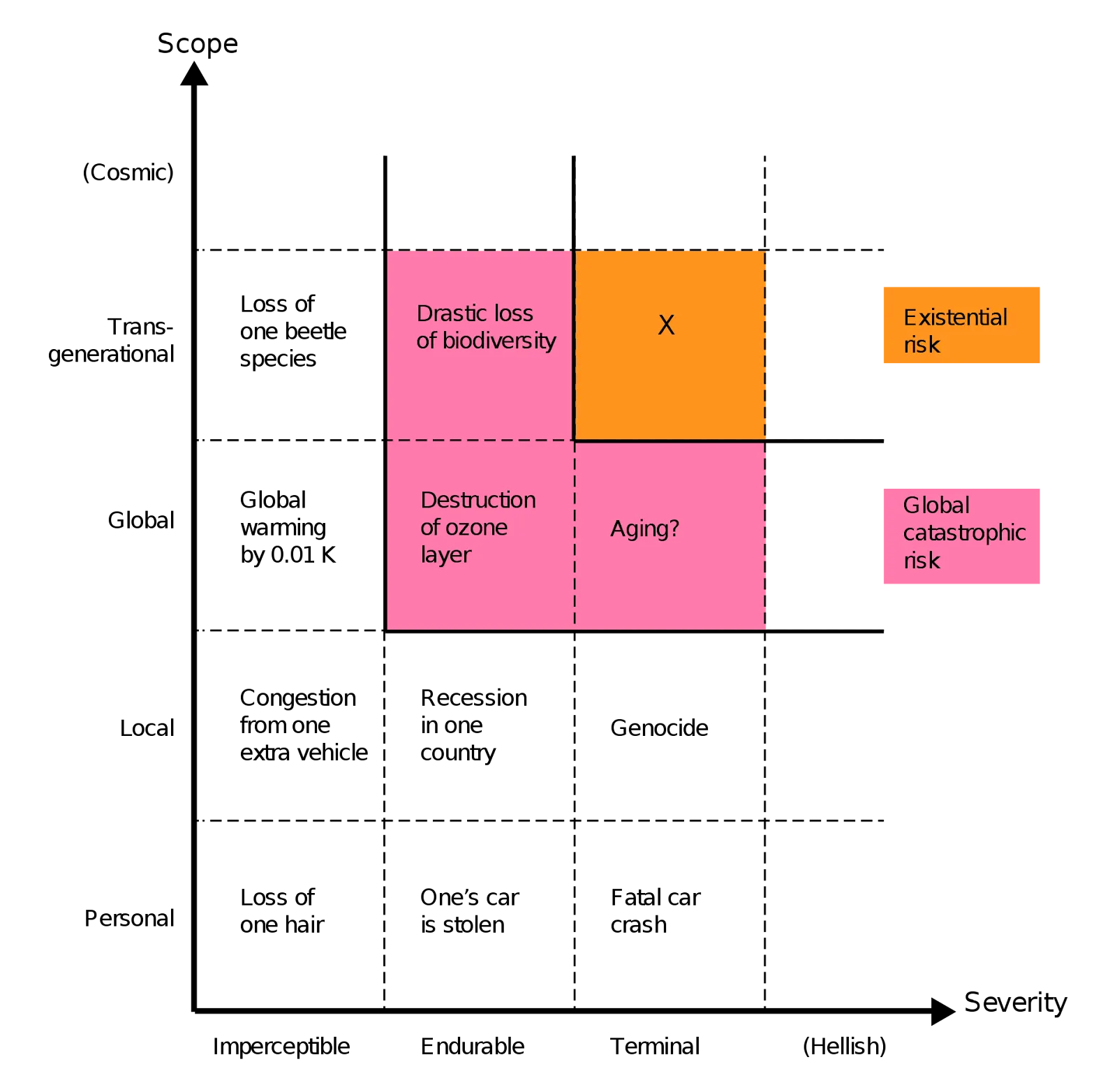

AI risks span a spectrum from individual harms to threats that could permanently derail human civilization. Understanding severity helps prioritize limited resources and calibrate our response to different types of risks. Rather than treating all AI risks as equally important, we can organize them by scope and severity to understand which demand immediate attention versus longer-term preparation.

Individual and local risks affect specific people or communities but remain contained in scope. The AI Incident Database documents over 1,000 real-world instances where AI systems have caused or nearly caused harm (McGregor, 2020; AI Incident Database, 2025). These include things like autonomous car crashes, algorithmic bias in hiring or lending that disadvantages particular individuals, privacy violations from AI systems that leak personal data, or manipulation through targeted misinformation campaigns. Local risks might involve AI system failures that disrupt a city's traffic management or cause power outages in a region. These risks are already causing immediate, documented harm to anywhere from thousands to hundreds of thousands of people.

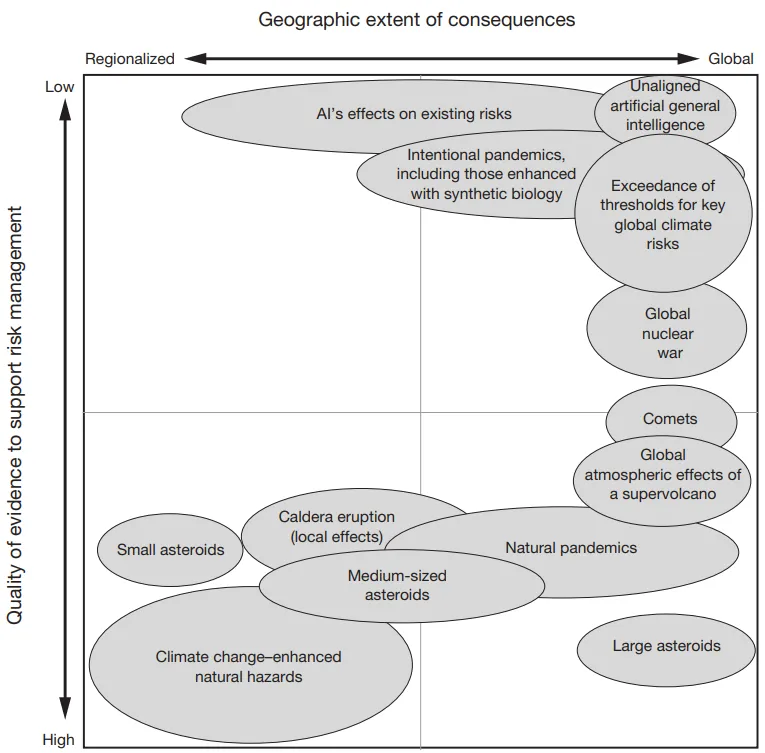

Catastrophic risks threaten massive populations but allow for eventual recovery. We call risks catastrophic when they become geographically widespread and the number of people affected reaches approximately 10% of the global population. Historical examples include the Black Death (killing about one-third of Europe's population) and the 1918 flu pandemic (50-100 million deaths); potential future scenarios include nuclear war or engineered pandemics (Ord, 2020). In the context of AI, these risks can cause widespread international disruption. Mass unemployment from AI automation could destabilize entire economies, creating social unrest and political upheaval. Cyberattacks using AI-generated malware could cripple a nation's financial systems or critical infrastructure. AI-enabled surveillance could allow authoritarian control over hundreds of millions of people. Democratic institutions might fail under sustained AI-powered disinformation campaigns that fracture shared reality and make collective decision-making impossible (Slattery et al., 2024; Hammond et al., 2025; Gabriel et al., 2024; Stanford HAI, 2025). These risks affect millions to billions of people but generally don't prevent eventual recovery or adaptation.

Existential risks (x-risks) represent threats from which humanity could never recover its full potential. Unlike catastrophic risks where recovery remains possible, existential risks either eliminate humanity entirely or permanently prevent civilization from reaching the technological, moral, or cultural heights it might otherwise achieve. AI-related existential risks include scenarios where advanced systems permanently disempower humanity, establish a stable, unremovable totalitarian regime, or cause direct human extinction (Bostrom, 2002; Conn, 2015; Ord, 2020). These risks demand preventative rather than reactive strategies because learning from failure becomes impossible by definition. [1]
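
The three severity tiers can be read as a simple decision rule over two inputs: how much of the population is affected, and whether recovery remains possible. The sketch below is one hedged reading of that rule. The function name `classify_severity` and its parameters are hypothetical, and it assumes the text's "approximately 10% of the global population" can be treated as a hard cutoff, which the text itself does not claim.

```python
from enum import Enum


class Severity(Enum):
    """Severity tiers described in this section."""
    INDIVIDUAL_OR_LOCAL = "individual/local"
    CATASTROPHIC = "catastrophic"
    EXISTENTIAL = "existential"


# Assumption: the text's approximate 10% figure is used here as a sharp threshold.
CATASTROPHIC_FRACTION = 0.10


def classify_severity(fraction_affected: float, recoverable: bool) -> Severity:
    """Map a scenario to a severity tier.

    fraction_affected: share of the global population affected (0.0 to 1.0).
    recoverable: whether humanity could eventually recover its full potential.
    """
    if not 0.0 <= fraction_affected <= 1.0:
        raise ValueError("fraction_affected must be between 0 and 1")
    # Irrecoverability defines the existential tier, regardless of scale.
    if not recoverable:
        return Severity.EXISTENTIAL
    if fraction_affected >= CATASTROPHIC_FRACTION:
        return Severity.CATASTROPHIC
    return Severity.INDIVIDUAL_OR_LOCAL
```

Checking recoverability first reflects the distinction drawn above: what separates existential from catastrophic risk is permanence rather than the number of people affected.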

Higher-severity risks represent irreversible mistakes with permanent consequences. We already see AI causing documented harm to real people and having destabilizing effects on global systems. However, catastrophic and existential risks present a fundamentally different challenge: if advanced AI systems cause an existential catastrophe, humanity cannot learn from the mistake and implement better safeguards. This irreversibility leads some researchers to argue for prioritizing prevention of low-probability, high-impact scenarios alongside addressing current harms (Bostrom, 2002), though people disagree about the appropriate balance of attention across different risk severities (Oxford Union Debate, 2024; Munk Debate, 2024).

These risk categories and severity levels provide the foundation for examining specific AI capabilities that could enable harmful outcomes. We focus the rest of the chapter on presenting concrete cases and arguments for how various AI developments could lead to different severities of harm, particularly focusing on those that might cross the line into catastrophic or existential.

Footnotes

1. Irrecoverable civilizational collapse, where we either go extinct or are never replaced by a subsequent civilization that rebuilds, has been argued to be possible but to have an extremely low probability (Rodriguez, 2020).
