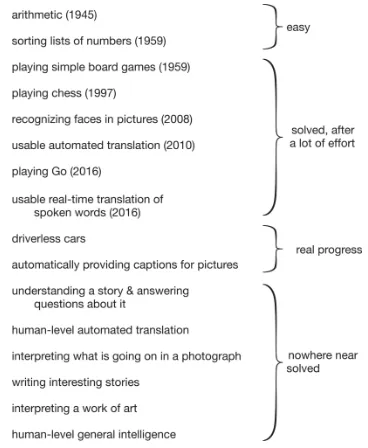

AI models can write, reason, code, generate media, and control robots, often matching or surpassing expert human performance on specific tasks. In this first section, we’ll try to give you a sense of what AI is actually capable of doing as of late 2025. Numbers and graphs don't really make these capabilities tangible, so we include as many videos, images, and examples as we can to help you get a sense of where AI currently stands. For readers who are interested, we also mention benchmark scores that measure progress quantitatively.

The trajectory matters more than a snapshot. This section can serve both as a quick history and as a snapshot of current capabilities. As you read through it, try to keep in mind how quickly we got here. Language generation went from coherent paragraphs to research assistants in a few years. Image generation went from laughable to professional-grade in a decade. Video generation seems to be following a similar path compressed into three years.

If you’re already familiar with AI or machine learning, some of these stories might be review for you, but hopefully every reader will be able to take away at least one new thing from this section.

Games

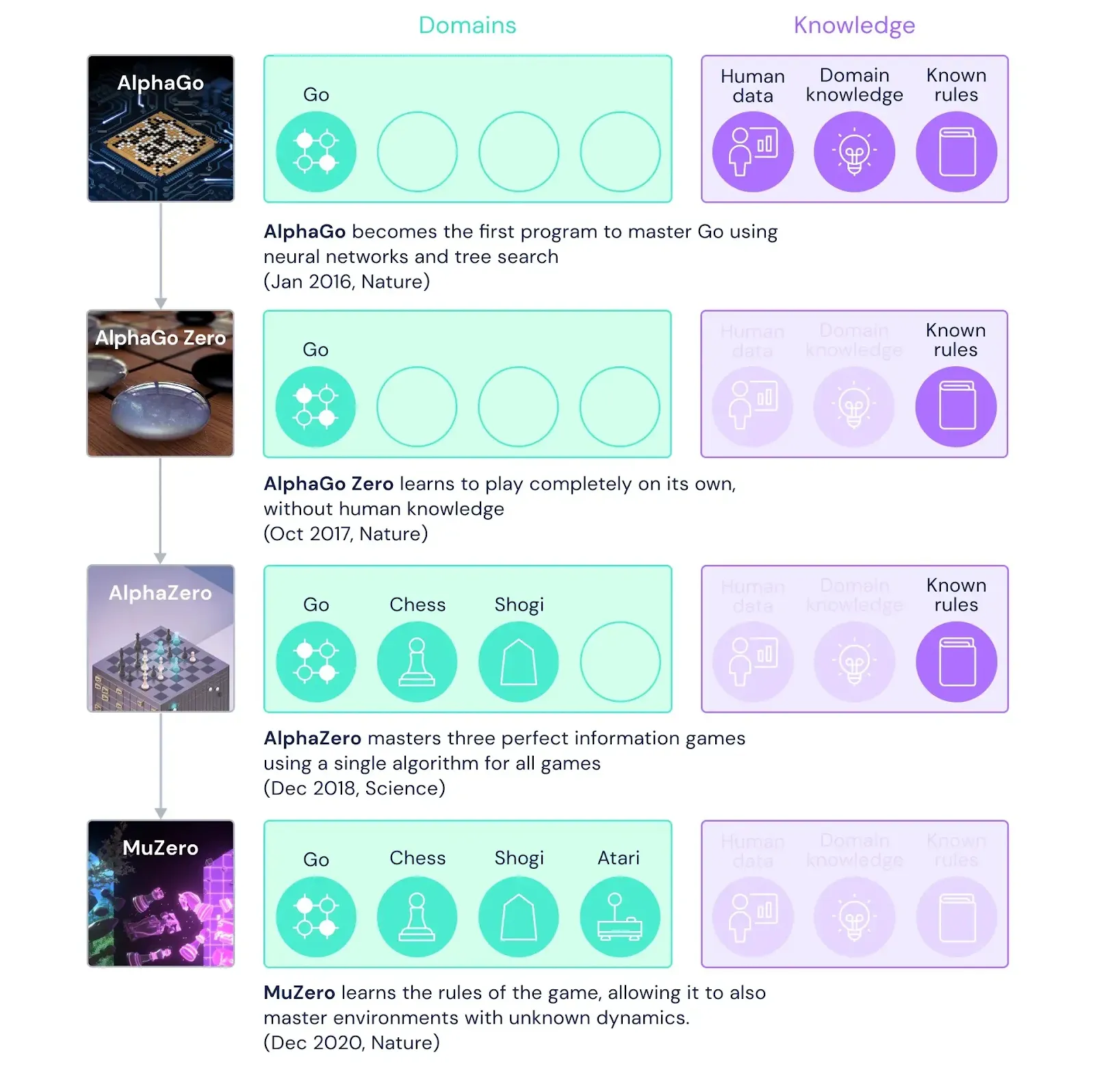

Game-playing AI is already superhuman at many games. Comparing AI and humans at games has been a common theme over the last few decades, with AI making continuous progress. IBM’s Deep Blue defeated world chess champion Garry Kasparov in 1997 (IBM, 2026), IBM’s Watson won overwhelmingly at Jeopardy! in 2011 (IBM, 2026), and AlphaGo beat the Go world champion Lee Sedol in 2016 (DeepMind, 2016). AlphaGo was a landmark moment because Go has more possible board positions than there are atoms in the observable universe. During game 2, AlphaGo played Move 37, a move it estimated a human player had only a 1 in 10,000 chance of choosing. Commentators initially thought it was a mistake; instead, it turned out to be the move that led to winning the game later on. The move demonstrated what many consider to be sparks of genuine creativity, diverging from the centuries of human play that the model was trained on.

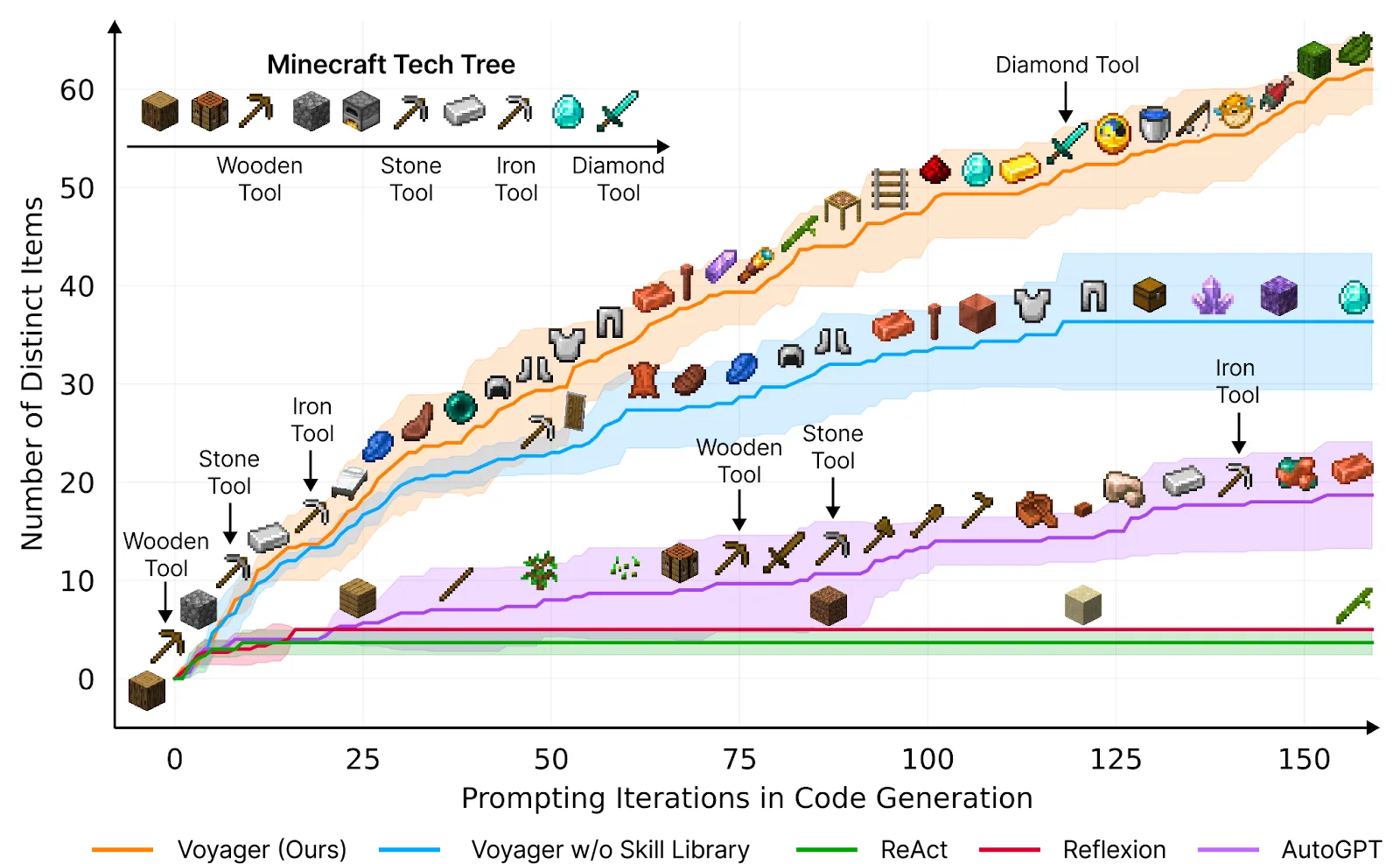

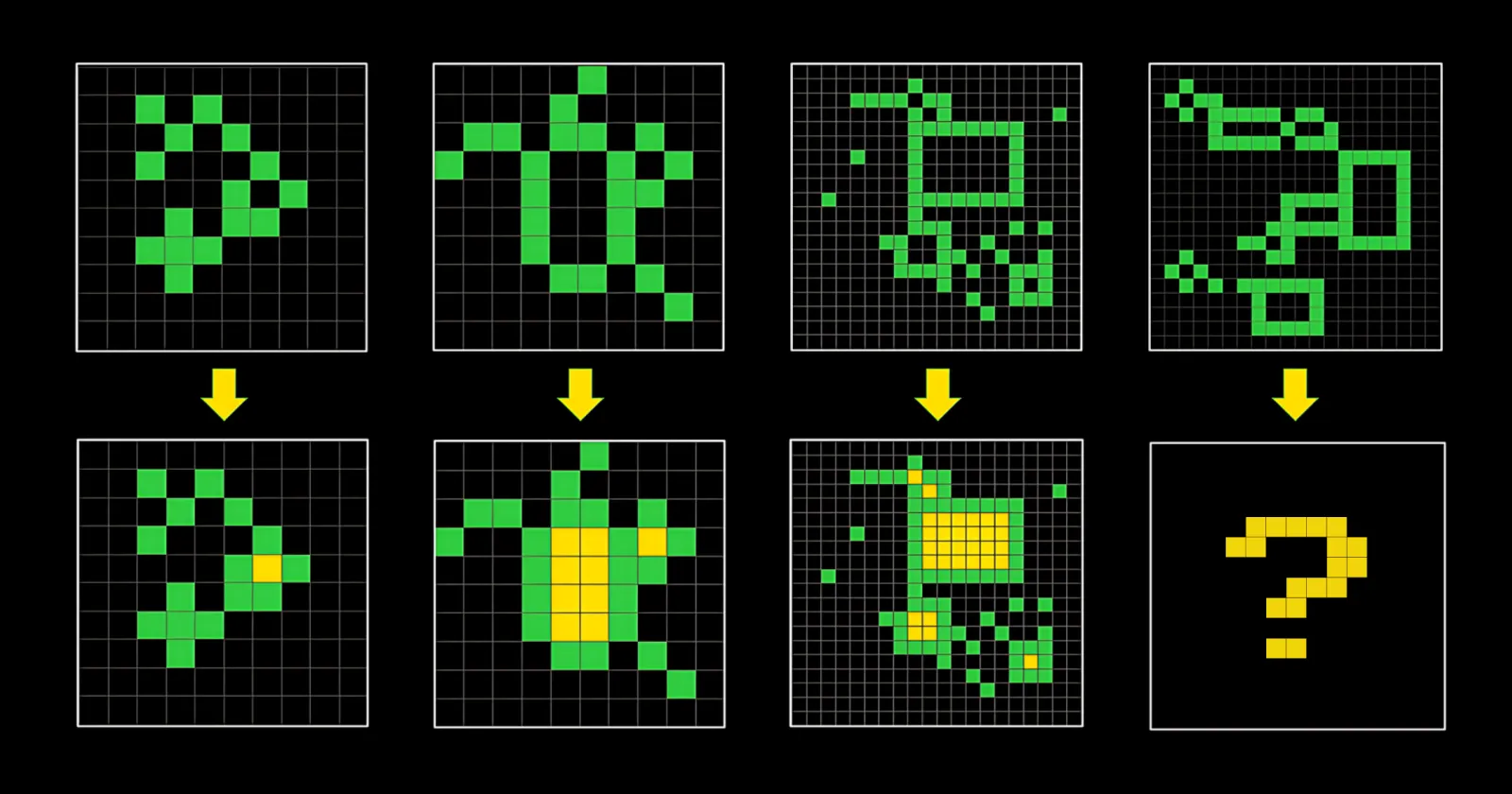

For the first time in the history of mankind, I saw something similar to an artificial intellect.

AI's superhuman game-playing capability extends to video games. Machine learning techniques applied to simple Atari games in 2013 (Mnih et al., 2013) progressed to OpenAI Five defeating world champions at DOTA 2 in 2019 (OpenAI, 2019). That same year, DeepMind's AlphaStar beat professional esports players at StarCraft II (Google DeepMind, 2019). These are games with open-ended, real-time environments that require thousands of rapid decisions and long-term planning. By 2020, the MuZero system played Atari games, Go, chess, and shogi without even being told the rules (Google DeepMind, 2020). [1] All of these AIs play and learn autonomously, without human intervention. In 2025, game-playing AIs have moved on to open-ended environments across a huge variety of games. They carry the abilities learned in one game over to the next, improving their performance over time (Google DeepMind, 2025).

Game-playing AI is relatively narrow in what it can do. Despite this, it is extremely impressive because of the strategic planning, pattern recognition, and adversarial thinking it displays. The same reasoning abilities that started with game playing (planning ahead, building strategies, adapting to feedback) now also apply to scientific research, mathematical proofs, and complex real-world problem-solving.

Text Generation

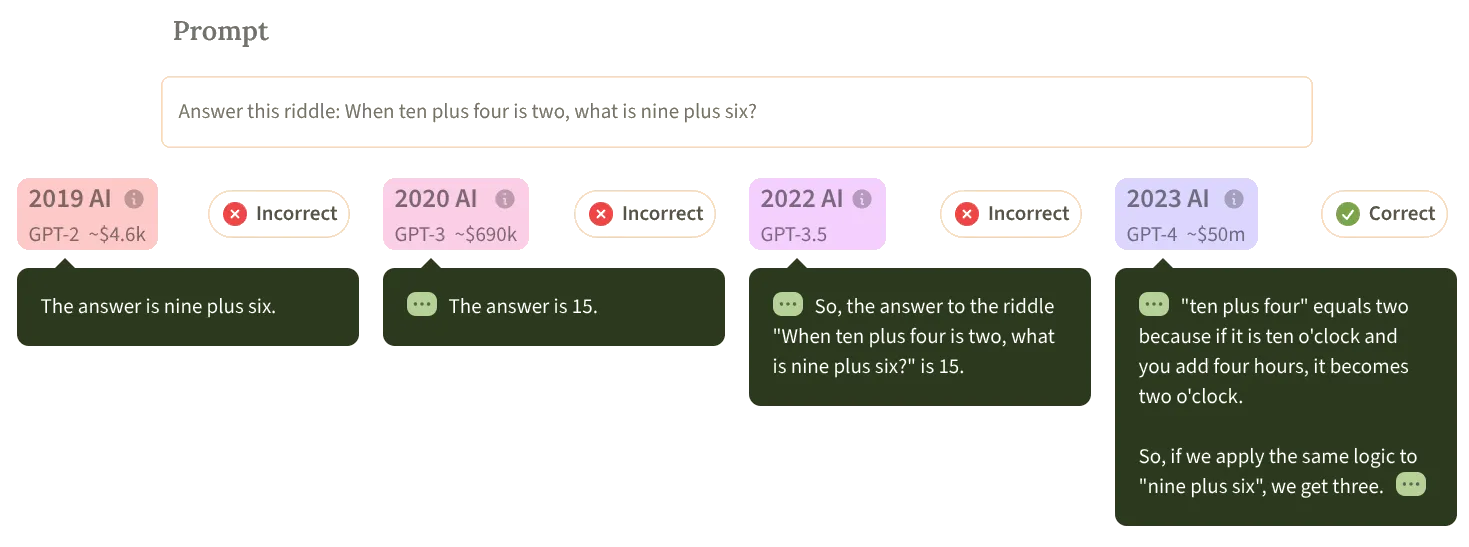

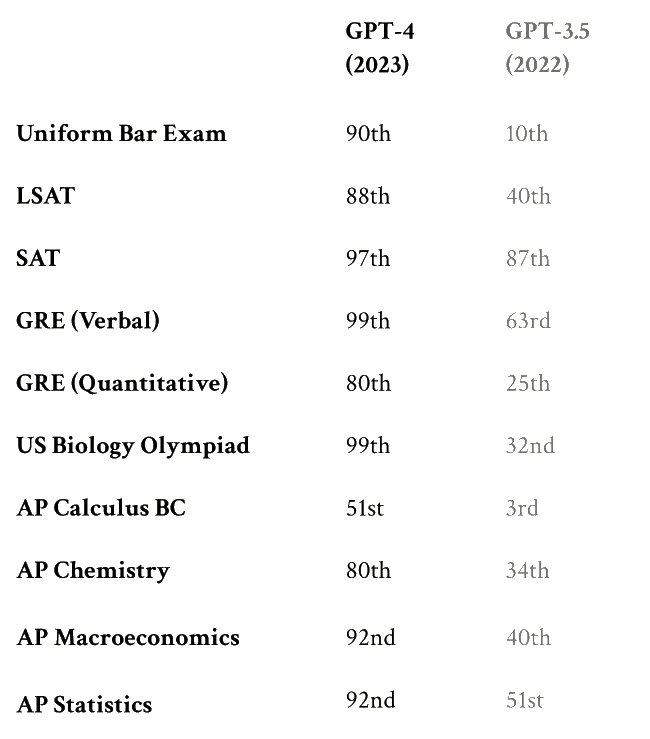

Generating text can take language models far beyond simple conversations. You're probably familiar with ChatGPT. These types of language generation AIs are what we call large language models (LLMs). In 2018, early versions of these LLMs could only write a few coherent paragraphs. But over a few short years, they have gotten a lot better. By 2025, GPT-5 gets 92.5% of questions right in domains ranging from highly complex STEM fields and international law to nutrition and religion (as measured by the MMLU benchmark). Models like Claude by Anthropic, Grok by xAI, GLM-4.7 by Zhipu AI, and DeepSeek-v3.2 show similar levels of performance across various domains (ArtificialAnalysis, 2025; EpochAI, 2025).

Language models have provided a core around which many impressive capabilities have emerged, such as scientific research, reasoning, and software development. All of these capabilities stem from the same principle of generating language and gradually refining it.

Tool Use

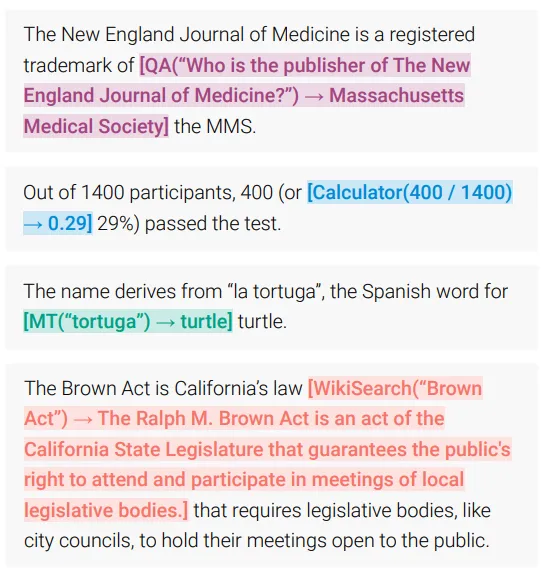

LLMs can intelligently use external tools, dramatically boosting performance. Language models in 2020 exhibited remarkable abilities to solve new tasks from instructions, but they struggled with basic functions like arithmetic. Instead of relying on a single model to do everything, LLMs increasingly call external tools to get both flexible instruction-following and reliable computation (Schick et al., 2023; Qin et al., 2023). They recognize when they need a calculator, code interpreter, or up-to-date information from a search engine, and call these tools appropriately. [2] Tool use significantly improves model performance; for example, the OpenAI o3 model with external tools outperforms o3 alone by almost 5% on benchmarks like Humanity's Last Exam (HLE) (EpochAI, 2025). As of December 2025, at least 10,000 tool servers are operational, including meta-tools like ‘a tool to search for tools’ that help LLMs find the exact one they need for a specific situation (Anthropic, 2025; Anthropic, 2025). The boost is significant enough that companies now report benchmark performance separately, with and without tools.
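To make the idea of "recognizing when a tool is needed and calling it" a bit more concrete, here is a minimal sketch of a tool-calling loop in Python. Everything in it is an assumption made for illustration: `fake_model` is a hard-coded stand-in for a real LLM, and the JSON message shape (`tool`, `input`, `answer`) and the `TOOLS` registry are hypothetical, not the format of any particular vendor or protocol.

```python
import ast
import json
import operator

# Hypothetical arithmetic operators supported by the illustrative calculator tool.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str):
    """Evaluate a basic arithmetic expression (+, -, *, /) without using eval()."""
    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        raise ValueError("unsupported expression")
    return _eval(ast.parse(expression, mode="eval").body)


# Hypothetical tool registry: maps a tool name to a plain Python function.
TOOLS = {"calculator": calculator}


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM (assumption): requests the calculator when it sees digits."""
    prefix = "TOOL_RESULT:"
    if prompt.startswith(prefix):
        # The tool result is available, so produce the final answer.
        return json.dumps({"answer": prompt[len(prefix):].strip()})
    if ":" in prompt and any(ch.isdigit() for ch in prompt):
        expression = prompt.split(":", 1)[1].strip()
        return json.dumps({"tool": "calculator", "input": expression})
    return json.dumps({"answer": "I can answer that without tools."})


def run(prompt: str, max_steps: int = 3) -> str:
    """Alternate between the model and the tools until the model gives an answer."""
    for _ in range(max_steps):
        message = json.loads(fake_model(prompt))
        if "answer" in message:
            return message["answer"]
        result = TOOLS[message["tool"]](message["input"])
        prompt = f"TOOL_RESULT: {result}"
    raise RuntimeError("no answer within the step budget")


if __name__ == "__main__":
    print(run("Compute: 1234 * 5678"))
    print(run("Say hello"))
```

The design point is the loop in `run`: the model never does the arithmetic itself. It only decides whether to delegate, and the surrounding program executes the tool and feeds the result back.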

Reasoning and Research

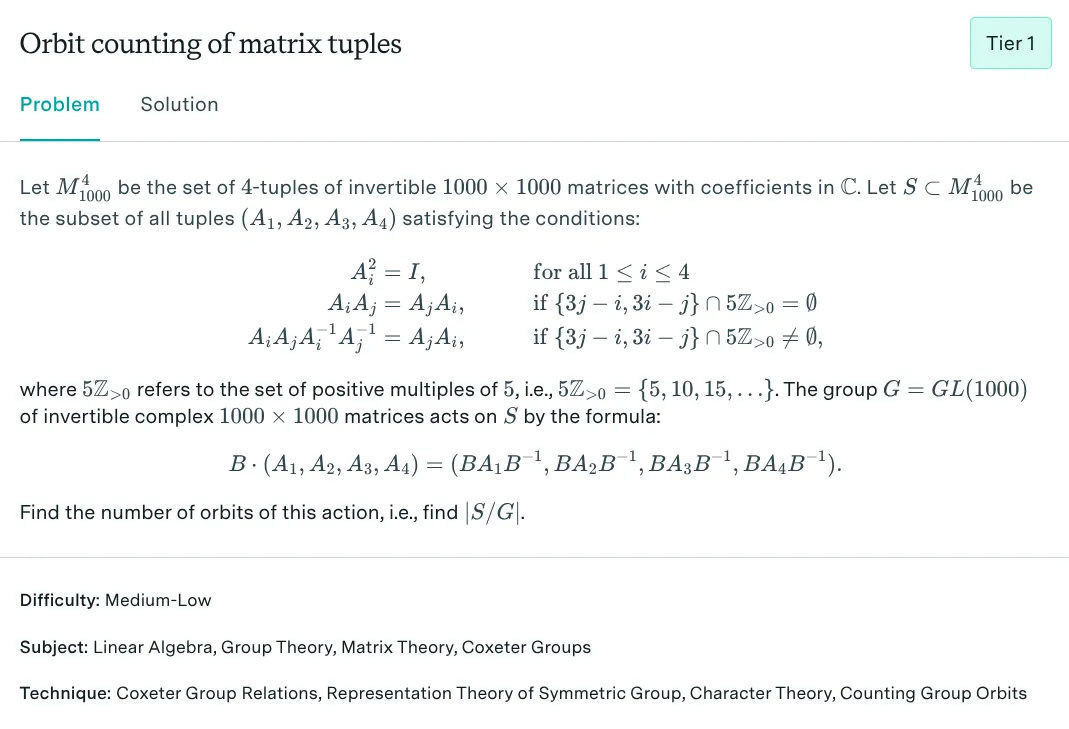

Models now demonstrate multi-step reasoning by working through problems step by step. In addition to using tools, LLMs now show their reasoning, catch their own errors, and backtrack when needed. In late 2024, OpenAI introduced o1, the first "reasoning" model. These AIs allocate more effort per problem, trading "thinking time" for accuracy. The longer they think, the better their responses tend to get (OpenAI, 2024). Using these reasoning techniques, both OpenAI and Google DeepMind achieved gold-medal performance at the 2025 International Mathematical Olympiad (OpenAI, 2025; Google DeepMind, 2025). On FrontierMath, a test of research-level mathematics, GPT-5.2 solved 41% of tier 1-3 problems (EpochAI, 2026). In ML terms, ‘thinking time’ corresponds to ‘inference’, the act of generating new tokens/words, so increasing thinking time is more formally called inference-time scaling. As with tool use, the performance boost is large enough that companies report high-thinking-time (high-compute) benchmark results separately from no-thinking-time (low-compute) results.
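One simple way to build intuition for trading inference compute for accuracy is majority voting over several sampled answers. The sketch below is a toy simulation under loud assumptions: `noisy_solver` is a hypothetical stand-in for a model that is right 60% of the time, and majority voting is a deliberately simple substitute technique, not a description of how o1 or any other reasoning model works internally.

```python
import random
from collections import Counter

CORRECT_ANSWER = 42  # Assumed ground truth for the toy problem.


def noisy_solver(rng: random.Random, p_correct: float = 0.6) -> int:
    """Hypothetical single model sample: correct with probability p_correct."""
    if rng.random() < p_correct:
        return CORRECT_ANSWER
    # Wrong answers are scattered across several values.
    return rng.choice([40, 41, 43, 44])


def majority_vote(rng: random.Random, n_samples: int) -> int:
    """Spend more inference compute: sample n answers and return the most common one."""
    answers = [noisy_solver(rng) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Estimate how often the voted answer is correct for a given sample budget."""
    rng = random.Random(seed)
    hits = sum(majority_vote(rng, n_samples) == CORRECT_ANSWER for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for n in (1, 5, 25):
        print(f"samples per problem: {n:2d}  accuracy: {accuracy(n):.3f}")
```

Running it shows accuracy rising as the number of samples per problem grows, which mirrors the qualitative claim above: more computation at answer time can buy more reliable answers, even when the underlying model is unchanged.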

LLMs can help generate and evaluate scientific hypotheses. By combining techniques like letting AI think for longer with tools like web search or specialized AI models, we are starting to see AI research assistants. As one example, Google introduced AI co-scientist in 2025. The team used it to generate and evaluate proposals for repurposing drugs, identifying drug targets, and explaining antimicrobial resistance in real-world laboratories (Google DeepMind, 2025). Others have attempted to build a fully autonomous AI scientist, which generates novel research ideas, writes code, executes experiments, visualizes results, describes its findings in a full scientific paper, and then runs a simulated review process for evaluation (SakanaAI, 2024).

AI is transitioning to an active research collaborator across scientific domains. Instead of only using language models, companies are also using approaches similar to AlphaZero to create a whole range of specialized models for scientific domains. For example, Demis Hassabis and John Jumper were awarded the Nobel Prize in Chemistry for their work on building AlphaFold (Google DeepMind, 2024). This is a model that helped solve the long-standing protein folding problem (Google DeepMind, 2022), and its successors AlphaFold 2 and 3 continue to aid thousands of researchers in biology (DeepMind, 2024). Similarly, AlphaGenome is helping us better understand human DNA (Google DeepMind, 2025), AlphaEvolve is helping generate faster algorithms for machine learning (Google DeepMind, 2025), and AlphaChip helps design the semiconductors that run those algorithms (Google DeepMind, 2024).

Beyond mathematics and scientific research, AI models are also developing more abstract intellectual skills. LLMs have some level of metacognition: they can evaluate the validity of their own claims and predict which questions they will be able to answer correctly (Kadavath, 2022). They have some knowledge about themselves and their limitations. Similarly, they display the ability to attribute mental states to themselves and others (theory of mind), which helps in predicting human behaviors and responses (Kosinski, 2023; Xu et al., 2024). We will talk more about how we concretely define and measure things like intelligence and metacognition in later sections.

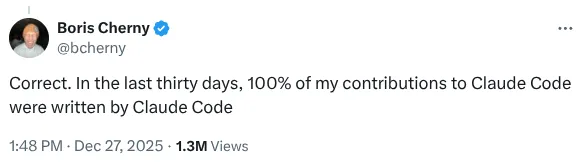

Software Development

Coding is evolving from autocomplete to collaborative software development. LLMs can generate text in any form, and one type of text they are proving to be especially good at generating is code. When paired with reasoning capabilities and tools, LLMs read documentation, edit codebases spanning thousands of files, run tests, debug failures, and iterate until tests pass, with increasingly minimal human guidance. In 2025, systems like Claude Opus 4.5, Gemini 3 Pro, and GPT-5.2 implement features and entire applications increasingly independently (Anthropic, 2025; Google DeepMind, 2025; OpenAI, 2025). When tested against real GitHub issues from open-source projects, AI systems in 2024 (Claude 3 Opus) could solve just 15% of the problems, but by 2025 this had jumped to 74% of issues (tools + Claude 4 Opus) (SWE bench, 2025).

Vision: Images and Video

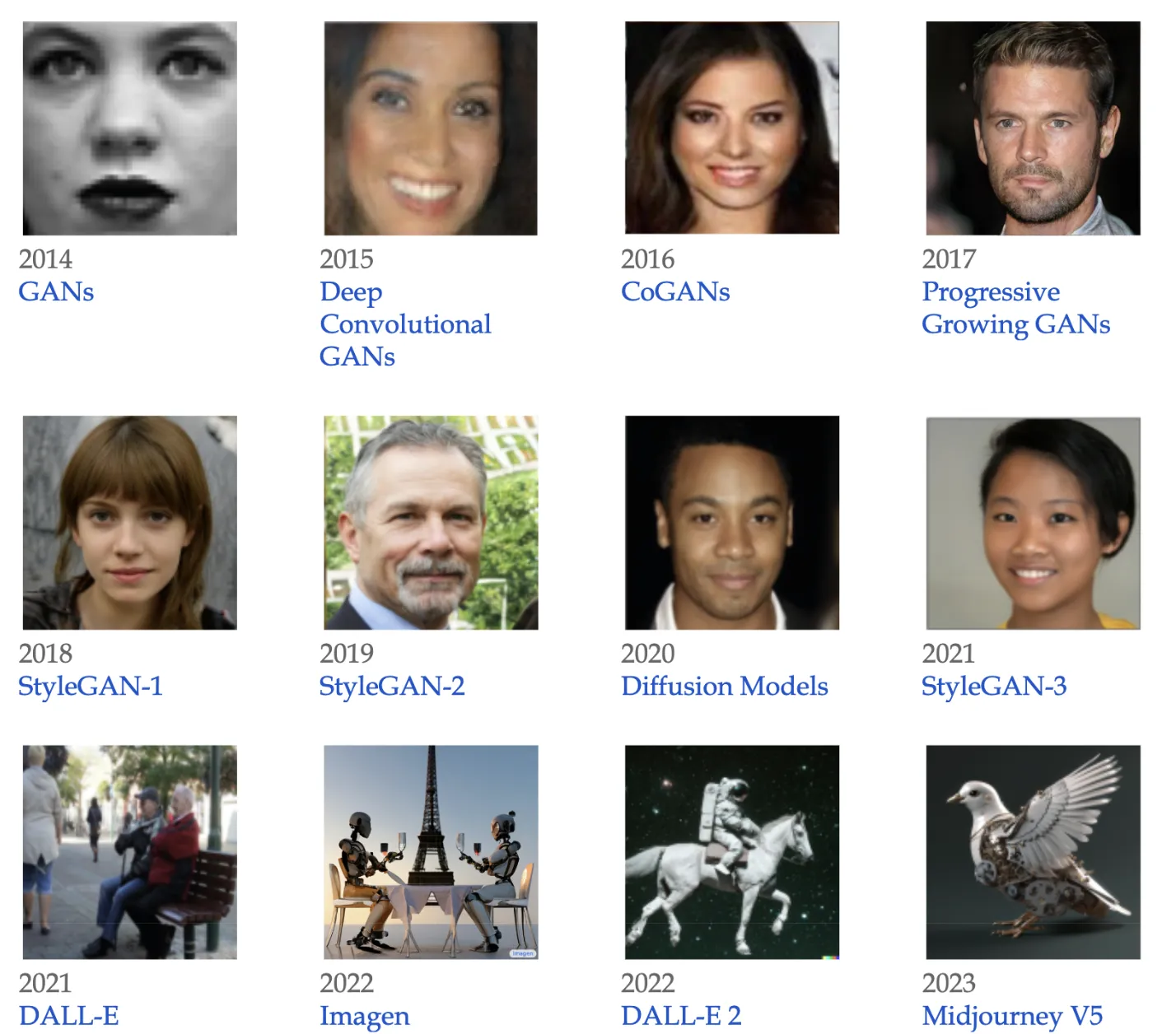

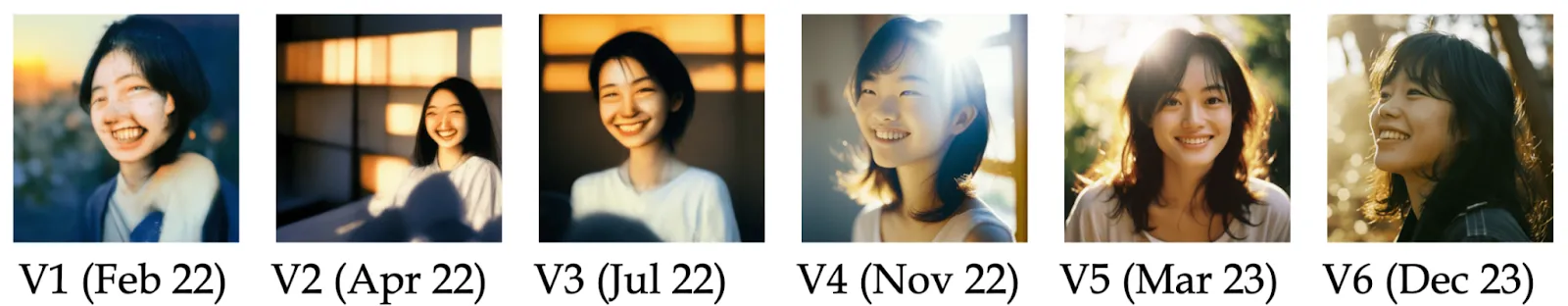

Image generation progressed from unrecognizable noise to photorealistic scenes in about a decade. In 2014, Generative Adversarial Networks (GANs) produced grainy, low-resolution faces (Goodfellow et al., 2014). By 2023, models generated detailed images from complex text prompts. Models like Midjourney v7 create photorealistic scenes nearly indistinguishable from professional photography. Video generation is following a similar trajectory: AI-generated videos and deepfakes are becoming increasingly hard to distinguish from real videos.

Large Multimodal Models (LMMs) combine language and image understanding capabilities. In 2025, multimodal models answer questions about spatial relationships in images, read embedded text in complex scenes, and extract information from charts and diagrams. Image models released in 2025 handle generation and sophisticated editing across modalities. Systems like Gemini 3 Pro Image support text-to-image and image-to-image generation, and handle complex editing such as changing lighting, style, or composition while maintaining coherence (Google, 2025).

Robotics

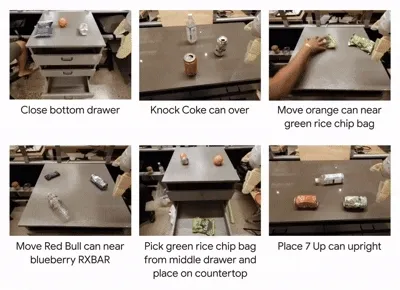

Both AI and robotics are evolving, with robots giving AI physical embodiment in the real world. Robotics is combining LLMs and visual models to create robot control models (e.g. RT-1 or RT-2). These robots can use techniques borrowed from language models, like breaking complex actions into step-by-step plans, to control robot manipulators (Google DeepMind, 2024). They have learned behaviors like opening cabinets, operating elevators, and cooking by observing human demonstrations. Robots have demonstrated the ability to perform intricate manipulation: sautéing shrimp, storing heavy pots in cabinets, and rinsing pans (Fu et al., 2024).

Autonomous robots are moving from research labs into real-world industrial deployment at significant scale. In 2023, China installed 276,300 industrial robots (AI Index Report, 2025). These systems handle welding, parts assembly, materials handling, and quality inspection—tasks requiring precision but not necessarily advanced reasoning. In addition to industrial robots, warehouse robotics represents one of the most mature deployments—Amazon operates over 1 million robots across its fulfillment network, handling everything from inventory storage to package sorting (Amazon, 2025). Robots in warehouses and industry are able to handle packages, speed up inventory identification using machine vision, and autonomously unload shipping containers.

Footnotes

1. KataGo is a system based on the techniques used by DeepMind's AlphaGo Zero, and it is similarly superhuman in its game play. In 2022, researchers demonstrated that, despite being superhuman, KataGo can be beaten by humans, exposing "surprising failure modes" of AI systems. This will be a repeated theme throughout our text (Wang and Gleave et al., 2022).

2. Standards like the Model Context Protocol (MCP) are formalizing how AI assistants connect to data repositories and development environments (Anthropic, 2024). MCP has been donated to the Linux Foundation (Anthropic, 2025).
