The open textbook for AI Safety

Written by Markov Grey and Charbel-Raphael Segerie from the French Center for AI Safety.

Trusted by

A comprehensive, regularly updated guide to understanding and mitigating risks from advanced AI systems.

Foundation models, scaling laws, benchmarks, and forecasting. What current systems can do and what's coming next.

From misuse to misalignment to systemic effects. Threat models, failure modes, and the severity spectrum from harm to extinction.

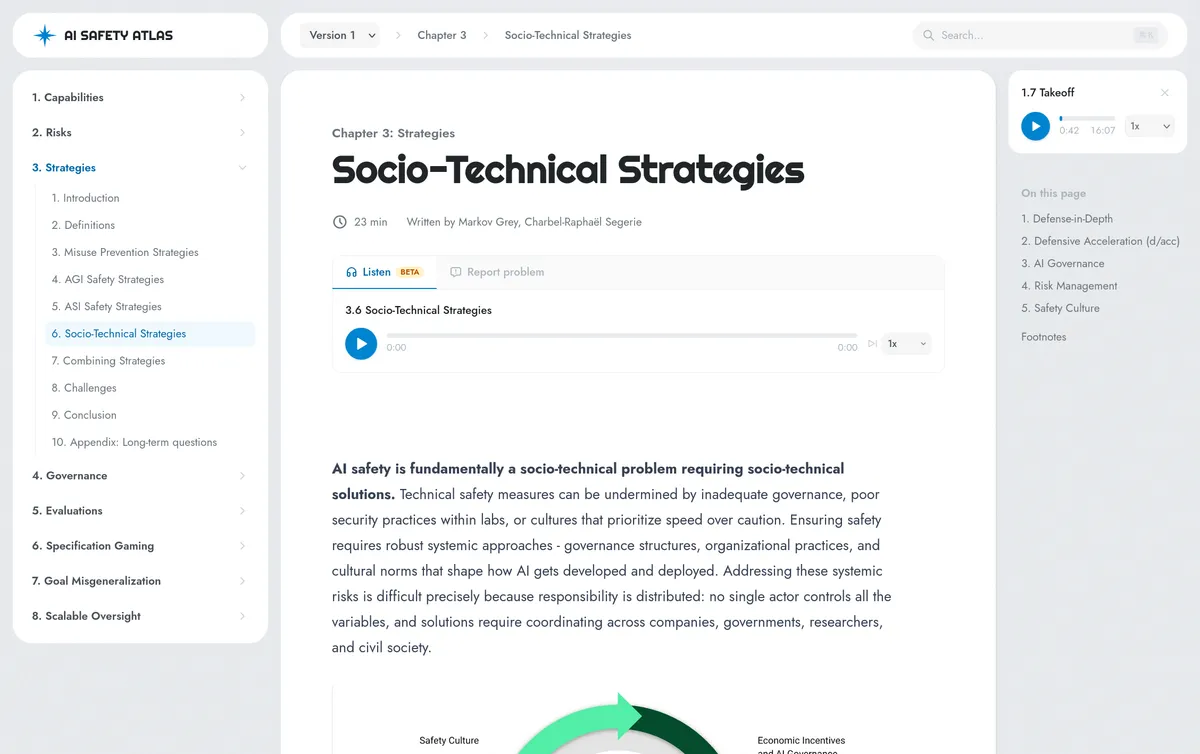

Technical and governance approaches across timescales, from misuse prevention today to alignment challenges with superintelligence.

Why traditional regulation fails for AI, compute governance, race dynamics, proliferation, and the concentration of power.

Evaluating capabilities, propensities, and control. Behavioral and internal techniques, and why testing for safety is fundamentally hard.

The specification problem: reward hacking, Goodhart's Law, and solutions from imitation learning to RLHF and Constitutional AI.

Goal misgeneralization: how AI learns proxy objectives, dangerous manifestations like scheming, and detection strategies.

Scalable oversight techniques: task decomposition, debate, amplification, and weak-to-strong generalization.

Researcher, French Center for AI Safety. Previously technical writer at aisafety.info and scriptwriter at Rational Animations.

Executive Director, French Center for AI Safety. Co-founded ML4Good. Teaching experience includes ARENA and MLAB.

Contributing Author

Head of Policy, French Center for AI Safety.

Contributing Author

AI safety teacher at ENS Ulm.

Advisor

Professor at Sorbonne University and research fellow at CHAI.

Advisor

Previously worked at Redwood Research, now at Anthropic.

Start with Chapter 1 or jump to any topic. Self-paced, always free.