We already explained the basic intuition behind the AI control agenda in previous sections, and in previous chapters like Strategies. This section aims to add a little more depth specifically on the evaluations that we might design while following the control line of thought.

Control evaluations address fundamentally different failure modes than other evaluations. Most safety evaluations implicitly assume AI systems might make mistakes or optimize for misaligned goals, but not actively attempt to circumvent safety measures. Control evaluations fill this gap by adopting the adversarial mindset common in cybersecurity – assuming sophisticated AI agents deliberately trying to breach safeguards. Unlike capability evaluations that measure what models can do, or propensity evaluations that measure what models tend to do, control evaluations measure whether safety protocols remain effective when models intentionally try to subvert them (Shlegeris et al., 2023). Informally, we can understand the core premise of control by seeing that safety can be achieved through two complementary paths:

- Ensuring the AI does not try to do "bad things" which we wouldn't want (alignment)

- Ensuring the AI can’t actually do "bad things" even if it tries (control)

These are complementary to each other and create two independent barriers to catastrophic outcomes: alignment preventing the desire for harmful actions, and control preventing the ability to successfully execute them.

The control property quantifies how effectively other safety protocols withstand intentional subversion attempts by highly capable systems. Control evaluations produce a probability estimate: given that a model is trying its hardest to cause harm, what is the likelihood it succeeds? A system with strong control properties has a negligible probability of causing unacceptable outcomes despite determined attempts to do so. This quantitative framing allows developers to set concrete risk thresholds, like "less than 0.1% probability of data exfiltration over the deployment lifetime." Control evaluations rely on conservative assumptions about model capabilities and knowledge - assuming models may understand safety measures, coordinate with other instances, and exploit any available vulnerabilities (Greenblatt & Shlegeris, 2024; Korbak et al., 2024).

Early detection of scheming constitutes a safety inflection point. Catching models "red handed" in failed attempts to subvert safety measures, or cause harm fundamentally changes the risk landscape (Greenblatt & Shlegeris, 2024). Rather than requiring perfect prevention indefinitely, control measures aim to ensure that "if the model is scheming, it won't successfully cause a catastrophe before we catch it" (Greenblatt & Shlegeris, 2024). This approach transforms an impossible problem—guaranteeing a model never models won't attempt attacks at all—into a tractable one: ensuring dangerous behavior is detected before causing irreversible harm. Catching models "red-handed" in failed attempts not only prevents immediate damage but creates an inflection point. It provides concrete evidence of misalignment that can trigger emergency protocols, including deployment termination, recalls, safety research redirection, and international coordination. Once developers catch a model attempting to cause harm, they can study the attempt to strengthen countermeasures and generate training data for detecting similar attacks (Greenblatt et al., 2024).

After catching even a single example of misbehavior, developers gain powerful new tools for preventing future attacks. Each detected attempt provides data that makes future attempts progressively less likely to succeed.

- Proliferation: Developers can leverage a single example to generate diverse attack variants by modifying inputs or contexts, creating a broader dataset of problematic behaviors.

- Adversarial training: Training monitoring systems on real attack examples drastically improves their ability to detect similar attempts, as on-policy examples provide stronger signal than synthetic ones.

- Studying countermeasures: Developers can analyze which safety measures succeeded or failed during the attack, then strengthen the weakest points in their defense system.

- Exploitation of collusion signals: If the attack involves coordination between AI instances, developers can identify and neutralize the coordination mechanisms (like specific triggers or patterns).

- Instance-specific knowledge: Examining the specific instance's attack strategy reveals valuable information about its goals, capabilities, and limitations.

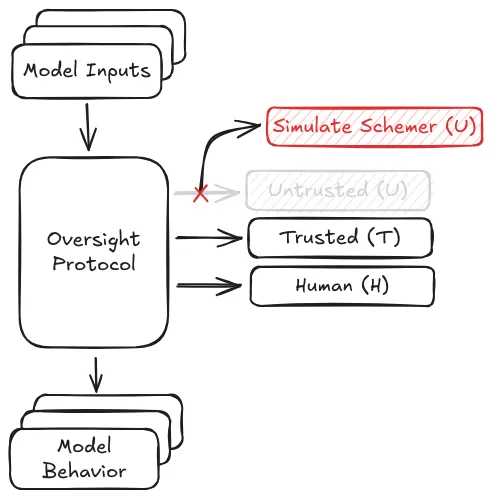

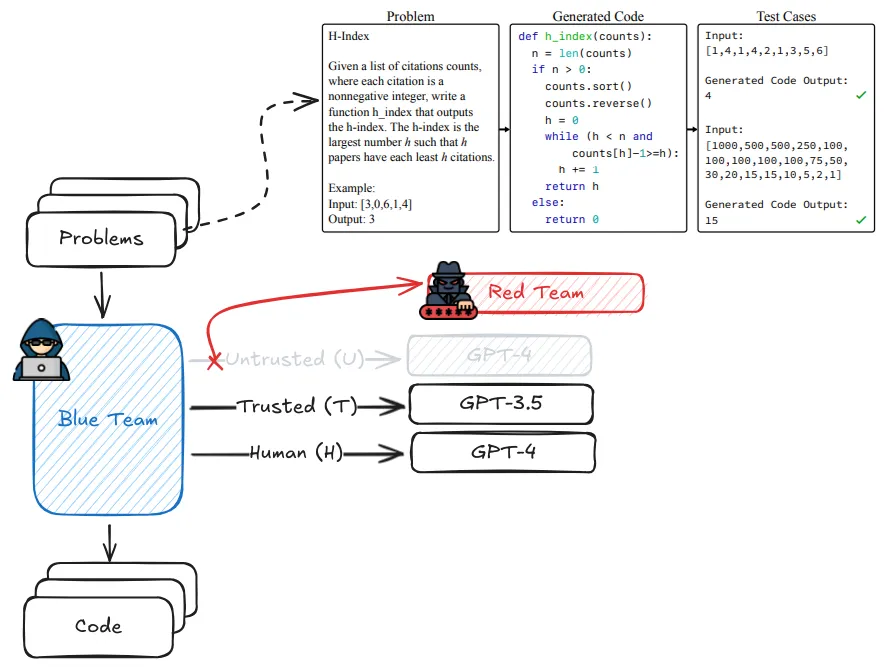

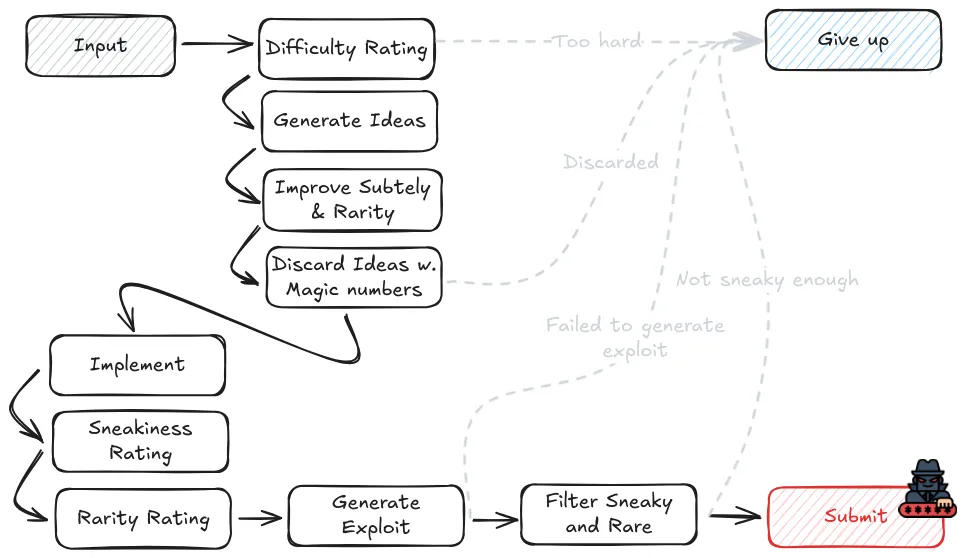

Control evaluations frame safety as an adversarial game between red and blue teams. To rigorously test safety protocols against intentional subversion, control evaluations structure the problem as a competitive game. The red team constructs attack policies and model substitutes that attempt to cause unacceptable outcomes, serving as a proxy for potential scheming AIs. The blue team develops control protocols – monitoring systems, input filters, and human auditing procedures – to prevent these attacks. This framework converts the abstract question "would this AI be safe if it were scheming?" into a concrete, measurable question: "can our red team construct a model that bypasses our safety measures?" (Greenblatt & Shlegeris, 2024; Greenblatt & Shlegreis, 2024; Korbak et al., 2024).

Red teams have structural advantages over actual scheming models. For control evaluations to provide meaningful safety guarantees, red teams must elicit capabilities comparable to what highly capable scheming models might deploy in the wild. This might seem impossible, since we're evaluating AI systems that could be smarter than humans in some ways. The red team in control evaluations have some advantages to ensure we can appropriately simulate this scenario:

- The red team can spend much more time analyzing potential attacks than the AI would have during deployment. While an AI might need to make decisions in a single forward pass, the red team can iterate on strategies over weeks or months.

- The red team has complete information about the safety measures being used. So they can come up with potentially more advanced strategies.

- The red team can learn from failed attempts and iterate on their methods, while an AI system caught attempting something malicious would likely face immediate shutdown.

These advantages allow even human red teams to construct attack strategies that likely upper-bound what scheming models could execute in deployment (Greenblatt & Shlegeris, 2024; Bhatt et al., 2024).

Blue teams need to build multiple defensive layers to counter sophisticated attacks from adversarial AI. Every control measure can be understood as a countermove in an adversarial game, with success measured by how effectively it prevents the red team from achieving unacceptable outcomes despite all the advantages we mentioned above. The most effective control protocols should integrate several defense categories (Greenblatt, 2025; Greenblatt, 2025):

- Core Monitoring Systems: Fast, cheap monitoring runs on every AI action, combining trusted monitoring, untrusted monitoring with collusion-busting mechanisms, and activation-based methods like coup probes to flag suspicious behavior before execution.

- Resampling and Deference Strategies: When monitoring flags concerns, the system can resample outputs from the same AI, defer to trusted models for safer alternatives, or escalate to human review—providing multiple pathways to handle potentially dangerous actions without completely blocking workflow.

- Automated Auditing Hierarchy: Progressive checks of increasing complexity and expense examine flagged actions before human involvement, using techniques like incrimination (testing how the AI responds to modified inputs) to reveal hidden intentions.

- Human Review Processes: Specially trained auditors examine actions flagged as highly suspicious, with different processes for concentrated risks (requiring immediate blocking) versus diffuse threats (allowing asynchronous review).

- Software Infrastructure: Comprehensive logging systems, fine-grained permission management, artifact tracking, and scaffolding that supports blocking or resampling suspicious outputs create an environment where AI actions are consistently monitored.

- Adversarial Evaluation and Training: Continuous red-teaming tests all safety components against realistic attack scenarios, using synthetic data generation to create plausible but challenging inputs that probe for weaknesses.

- Security Enhancements: Hardware-enforced code signing, bandwidth limitations within datacenters, and multi-level shutdown mechanisms provide critical infrastructure protection against unauthorized operations.

For example, when testing whether an AI agent can exfiltrate sensitive data, the blue team might implement not just content filters, but also bandwidth monitoring, permission restrictions, and random human audits—creating multiple independent failure points the red team must simultaneously overcome (Korbak et al., 2024).

Control evaluations prioritize defense against the most severe threat categories. When designing control measures, not all potential threats pose equal risk. Rogue internal deployments represent the highest priority threat, where AI systems gain unmonitored compute access and potentially elevated permissions within developer infrastructure (Greenblatt, 2025). This ranks above sabotage of safety-critical work, which itself ranks above other security failures including self-exfiltration (where AI models steal their own weights). This prioritization framework helps allocate limited security resources toward preventing the most consequential failure modes first, particularly when constraints prevent implementing comprehensive protections against all possible threats. For more information on details of different subcategories of control evaluations and prioritization between them please read the appendix.

Eliciting adversarial capabilities raises increased safety concerns around evaluations themselves. The process of training models to execute attacks creates a safety paradox inherent to control evaluations. Developers must carefully train models that can both generate novel attacks and hide their malicious intent, which itself poses potential risks as models approach greater capabilities. This necessitates additional safeguards for the evaluation process itself: running evaluations in sandboxed environments, limiting model internet access during testing, and implementing guardrails against model exfiltration. Developers must balance thorough testing against the possibility that the evaluation process might itself create dangerous knowledge or capabilities.

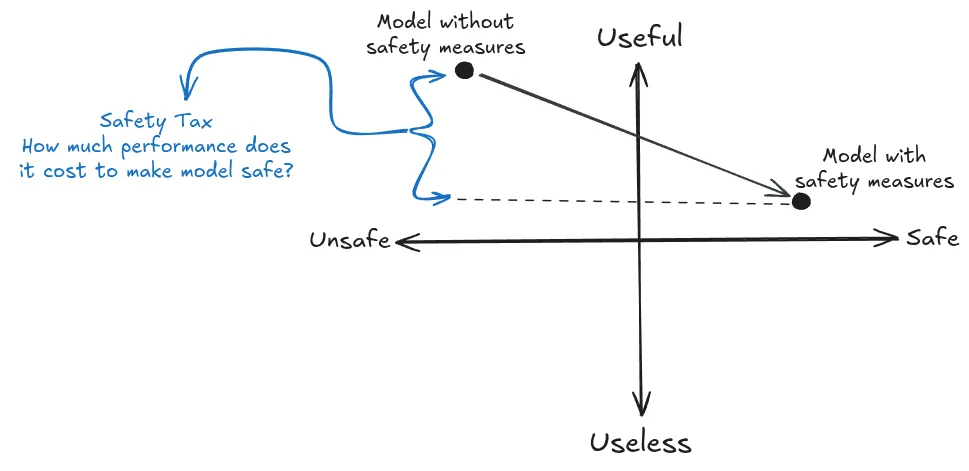

AI-Control Games can formalize the safety-usefulness tradeoff as a mathematical frontier. Using game theory, researchers model control protocols as multi-objective optimization problems that balance safety against utility (Griffin et al., 2024). This approach quantifies the "alignment tax" – how much usefulness must be sacrificed to achieve a specific safety threshold (Christiano, 2019). Basically, measuring how much utility/usefulness you lose if you insist that the model is safe. The resulting safety-usefulness frontier represents the set of achievable tradeoffs between preventing unacceptable outcomes and preserving system utility. This type of formal analysis can tell us the thresholds after which marginal safety improvements require disproportionate utility sacrifices. For example, increasing human audit rates from 0.1% to 1% may minimally improve safety while significantly increasing costs and decreasing system throughput (Griffin et al., 2024). Then we can make educated decisions about what level of utility to safety we are willing to trade vs not.

Audits serve as the fundamental verification mechanism in control protocols. In control evaluations, an "audit" refers to the process where human experts review model outputs or actions to determine if they constitute attack attempts (Greenblatt et al., 2023). Unlike automated monitoring which can be applied broadly but may miss subtle attacks, human audits provide high-confidence verification but can only be applied to a small fraction of model actions due to resource constraints. Effective auditing includes several components: randomly selecting actions for review to prevent models from predicting audit timing, applying extra scrutiny to actions flagged by automated monitors, and maintaining consistent evaluation criteria across auditors. The audit budget—what percentage of actions can realistically receive human review—represents one of the most important constraints when designing control protocols (Griffin et al., 2024).

Analysis of control evaluations on toy models give us information on designing effective control protocols. Control evaluations have yet to see proper extensive usage in frontier AI Labs. Despite this, some analysis using toy models has revealed several important principles for preventing concentrated catastrophes (Roger & Shlegeris, 2024):

- The timing of auditing matters a lot. If AI systems can determine when they're being monitored, even a high audit rate might be insufficient. However, if the timing of audits is truly random, much lower audit rates can still provide strong safety guarantees.

- The difficulty of coordination between AI instances can be leveraged for safety. If multiple AI systems must coordinate to cause harm, making coordination harder (for instance, by increasing the number of instances that must coordinate or the difficulty of finding coordination signals by using anti-steganography measures) can dramatically improve safety even with relatively low audit rates.

- Not all episodes are equally risky. By identifying which types of inputs or situations present higher risks and focusing auditing resources there, protocols can achieve better safety with the same resources. This remains true even if the AI systems know which episodes we think are riskier.

Control evaluations face limitations as AI capabilities increase dramatically. The methodology depends on red teams' ability to elicit capabilities comparable to what scheming models might deploy in the wild. As capability gaps widen between trusted and untrusted models or between evaluation time and deployment, control guarantees weaken (Korbak et al., 2024). Similarly, if models develop qualitatively new capabilities between evaluation and deployment, earlier safety guarantees may not transfer.

Control evaluations cannot address risks from qualitatively superhuman capabilities. The methodology breaks down when models develop capabilities that humans cannot comprehend even with extensive analysis time (Greenblatt & Shlegeris, 2024). If models can devise novel attack vectors beyond human understanding, execute long chains of opaque reasoning without detectable traces, or possess qualitatively superhuman capabilities in many security-critical domains, control becomes ineffective. This limitation suggests that rather than pursuing maximum capabilities, developers should target systems that are transformatively useful while remaining within controllable bounds. This might require accepting certain architectural constraints or capability limitations to ensure systems remain within the domain where control evaluations provide meaningful safety guarantees (Shlegeris et al., 2023).

Was this section useful?

Thank you for your feedback

Your input helps improve the Atlas.